Songle: A Web Service for Active Music Listening Improved by User Contributions

Members: Kazuyoshi Yoshii, Hiromasa Fujihara, Matthias Mauch, Tomoyasu Nakano, Yuta Kawasaki, and Minoru Sakurai

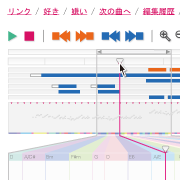

This paper describes Songle, a public web service for active music listening that enriches music listening experiences by using music-understanding technologies based on signal processing. Although various research-level interfaces and technologies have been developed, it has not been easy to get people to use them in everyday life. Songle serves as a showcase that demonstrates how people can benefit from music-understanding technologies by letting them experience active music listening interfaces on the web. Songle facilitates deeper understanding of music by visualizing automatically estimated music scene descriptions, such as music structure, hierarchical beat structure, melody line, and chords. When using music-understanding technologies, however, estimation errors are inevitable. Songle therefore features an efficient error correction interface that encourages people to contribute by correcting those errors, which improves the web service. We also propose a mechanism of collaborative training for music-understanding technologies, in which the corrections will be used to improve music-understanding performance through machine learning techniques. We hope Songle will serve as a research platform where other researchers can exhibit results of their music-understanding technologies to jointly promote the popularization of the field of music information research.

Revolutionary music listening experiences enabled by music-understanding technologies